I can never remember how to do this, and it's not that easy to find, unfortunately:

ec2-describe-images -o amazon --filter "image-type=kernel" ec2-describe-images -o amazon --filter "image-type=ramdisk"

I can never remember how to do this, and it's not that easy to find, unfortunately:

ec2-describe-images -o amazon --filter "image-type=kernel" ec2-describe-images -o amazon --filter "image-type=ramdisk"

Jim Halberg (who starred in such films as Gladys the Groovy Mule and The Boatjacking of Supership '79), has ported Growl4Rails over to jQuery for your jQuerying pleasure.

https://github.com/jimhalberg/growl4rails

Thanks Jim, and enjoy!

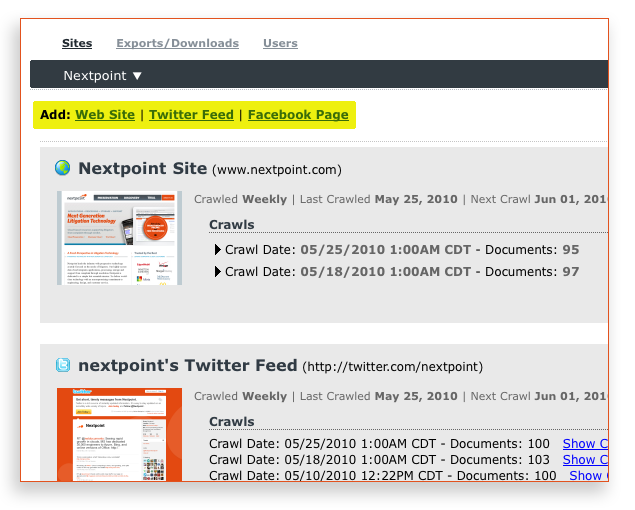

A couple months ago I suggested that I would start blogging more after getting rid of Twitter and Facetube, but wasn't really able to deliver on that promise because we've been working on something really big at the Nextpoint Lab. Now, I'm real excited that we can let the cat out of the bag and talk about what that is.

![]()

This product we've been working full steam on and now (finally) happy to be announcing in an open beta program is called CloudPreservation. And what it provides is a web-based service that automatically crawls your web properties at chosen intervals, building an archive of html source code and resources, high quality snapshots, and a robust full-text search index. The service makes it a breeze to go back in time with all of your web sites, blogs, Facebook fan pages, and Twitter accounts to search content, preview the site, and export data.

There's a bunch of reasons why organizations need this service, foremost being regulatory and legal compliance. But another huge one that I think affects this group of folks is backup and business/site continuity, and this works great for keeping a historical archive of the content and (coming soon) images and resources (javascript/css) files from your site.

Just like our other products, we're leveraging cloud computing to do the crawling and imaging of these sites, so we're able to scale this out enorumously (and I really mean infinitely) at extremely affordable prices. There's a 30 day free trial, and we've got pricing plans that should suit the needs of any personal blogger who wants some piece of mind, or a huge corporation who can finally be compliant with regulations. We've even got a free plan for those that can get away with it.

We want you to give it a spin. Sign up for a 30-day trial of one of our paid plans, or just take us to the cleaners and pick the free one :). Since we're in beta, I really need your feedback. I know lots of you are some of the most opinionated and nitpicky folks I know, and I want all the nitpicks and opinions because they're super valuable to me, and can really help improve this much needed service. Feel free to give feedback here on the blog, send me an email, or send an email to our feedback email address.

Thanks so much for giving it a whirl!

This American Life had a great radio show last week about New United Motor Manufacturing, Inc. (NUMMI), which was a joint venture between Toyota and General Motors so that GM could learn the Toyota Production System and so that Toyota could learn how to apply the Toyota Production System in the United States.

It's a fantastic show and if you've read Implementing Lean Software Development: From Concept to Cash from Mary and Tom Poppendieck, I think you'll really get a kick out of it.

Highly recommended.

http://www.thisamericanlife.org/radio-archives/episode/403/nummi

Posted by Jim Fiorato at 7:11 PM 0 comments

Labels: development , process

Just about every week, Ben and I talk about how this is the week we're done with Facebook and Twitter. We talk about how big of a distraction it is, how little great information we get from it, and how things people say can get us worked up for no great reason. Tired of the .02% of your "friends" who just flood your information streams with useless status updates or political rants.

Don't get me wrong, I think that 1-2% of the stuff that I read there is interesting, nice to know or informative. But that's a pretty low hit rate for signal vs. noise.

There's this weird and unhealthy emotional attachment to it, which causes me to never hit the off button. Sometimes I feel like I've built up this big property, and I'm scared to just let it go (like there's a bunch of other jfiorato's out there waiting in line for that username). Then there's just the fear of missing something important, that I won't see anywhere else, or that I'll be doomed to find out later than everyone else.

But I don't like the way these attachments make me feel. As if we don't have to enough live in fear of these days, fearing that I'm not up to date, or fearing that I'm not marketing myself as well as I could, just isn't necessary anymore. I've just been sick of having so much of my stuff owned by others. Tired of banks owning my shit. Tired of TV owning my CPU cycles. Tired of Facebook and Twitter owning my words and pictures.

I feel like I got so much more value out of reading and writing more than 140 characters, but all these 140 character "efficiencies" have ended up paralyzing me.

But, still, unready to fully commit to anything, to test things out, Ben and I made a pact to not check Twitter or Facebook for 2 weeks (had our wives change our passwords), and then see where we're at then. Maybe after the two weeks, deleting the accounts, or maybe just leaving them there without knowing the password, not sure yet.

So, I'm hoping to pick back up here, and start really writing again.

Posted by Jim Fiorato at 6:04 AM 3 comments

Why is it that development teams seem to rarely ever know what the financials look like of the product they are building? Is it that the company doesn't have that information? Unlikely. Is it that it's sensitive information that shouldn't be passed around to just anyone? Maybe. Is it that the company doesn't want to concern the team with all the gory details? Most likely.

The ultimate goal of the company is to make more money. Yes, please customers, employ more people are there, but the goal is to make more money. If all your team has for determining success is "on-time delivery" and "quality", the team will end up building process and metrics which may hit the nail on the head for "on-time delivery" and "quality", but my be of poor value when it comes to making more money. Things like "on-time delivery" and "quality" are both obviously going be a positive thing, but the company should draw the line between these metrics and the financials with the development team. The team is smart, and the company should let the team have some input on the metrics that result in making more money.

Hiding the financials is hiding score from the team. Like a basketball team that only knew how many times they turned over the ball, or baseball team that only knew how many stolen bases they had.

A company can't pretend that the goal isn't anything other than making more money. The team understands that, and wouldn't mind seeing a big fat chart that correlates the fact that they kicked ass to get a feature out in February and with a big spike in sales in July.

The team has to know what the score is.

Posted by Jim Fiorato at 4:16 AM 0 comments

Labels: development , process , team

Last year I decided it was time to update home theater pc. The old one was still on Windows XP with Windows Media Center 2005. The hardware was old and I wasn't really using it much any more, opting to use my cable box w/ much better high definition support.

So, I decided to do the build this time on my own, and I thought I'd detail it here for reference.

My requirements for the HTPC were:

Scalability always seems to be the poster child for YAGNI. Lately however, the barrier to entry of scaling out is decreasing as the services that provide elastic infrastructures are wildly abundant. Scaling a website is easier now than ever for just about anyone, but for some reason upfront scalability design seems to still be a bit taboo.

I wanted to test how difficult it would be to do upfront scalable implementation, and pay little or nothing until the scaling needed to happen. So, I decided to dive in and see how I would create a site that needed to start with serving a small number of pages, do a little bit of work, and use a little bit of storage, while paying a little bill, but with the ability to scale out the web page serving, the work, and the storage, infinitely.

Recently my Dad was asking how to download all the photos from a set in Flickr so he could burn them to a CD for my grandmother. There's a few desktop apps and Firefox extensions for it, but everything requires you to have to download and install software or use a certain browser. So, I thought a web application that looks at a picture site, and zips up the original images in a set or album and let's the user download them would be a good sample application for this test. You can see the application here.

Posted by Jim Fiorato at 4:56 AM 3 comments

Labels: design , development , scaling